David Trafimow, Valentin Amrhein, Corson N. Areshenkoff, Carlos J. Barrera-Causil, Eric J. Beh, Yusuf K. Bilgiç, Roser Bono, Michael T. Bradley, William M. Briggs, Héctor A. Cepeda-Freyre, Sergio E. Chaigneau, Daniel R. Ciocca, Juan C. Correa, Denis Cousineau, Michiel R. de Boer, Subhra S. Dhar, Igor Dolgov, Juana Gómez-Benito, Marian Grendar, James W. Grice, Martin E. Guerrero-Gimenez, Andrés Gutiérrez, Tania B. Huedo-Medina, Klaus Jaffe, Armina Janyan, Ali Karimnezhad, Fränzi Korner-Nievergelt, Koji Kosugi, Martin Lachmair, Rubén D. Ledesma, Roberto Limongi, Marco T. Liuzza, Rosaria Lombardo, Michael J. Marks, Gunther Meinlschmidt, Ladislas Nalborczyk, Hung T. Nguyen, Raydonal Ospina, Jose D. Perezgonzalez, Roland Pfister, Juan J. Rahona, David A. Rodríguez-Medina, Xavier Romão, Susana Ruiz-Fernández, Isabel Suarez, Marion Tegethoff, Mauricio Tejo, Rens van de Schoot, Ivan I. Vankov, Santiago Velasco-Forero, Tonghui Wang, Yuki Yamada, Felipe C. M. Zoppino and Fernando Marmolejo-Ramos. Manipulating the Alpha Level Cannot Cure Significance Testing. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2018.00699

Abstract:

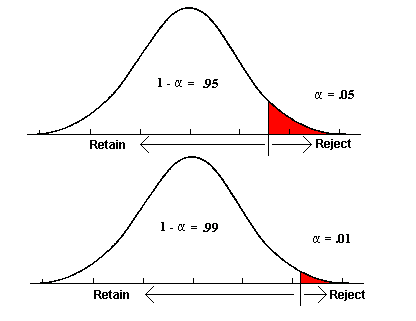

We argue that making accept/reject decisions on scientific hypotheses is deleterious to new discoveries and to the progress of science, and that this holds equally for a recent call to change the canonical alpha level from 0.05 to 0.005. Given that blanket and variable alpha levels are both problematic, it is sensible to dispense with significance testing altogether. There are alternatives that address study design and sample size much more directly than significance testing does, but no statistical tool should be taken as the new magic method that gives clear-cut mechanical answers. Inference should not be based on single studies at all, but on cumulative evidence from multiple independent studies. When evaluating the strength of the evidence, we should consider, for example, auxiliary assumptions, the strength of the experimental design, and implications for applications. Boiling all this down to a binary decision based on a p-value threshold of 0.05, 0.01, 0.005, or anything else is not acceptable.