Abstract:

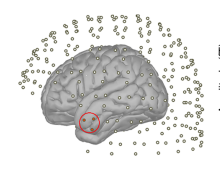

Research on how the brain construes meaning during language use has prompted two conflicting accounts. According to the ‘grounded view’, word understanding involves quick reactivations of sensorimotor (embodied) experiences evoked by the stimuli, with simultaneous or later engagement of multimodal (conceptual) systems that integrate information from various sensory streams. Conversely, according to the ‘symbolic view’, word understanding depends crucially on multimodal operations, with embodied systems playing epiphenomenal roles after comprehension. To test these contradictory hypotheses, the present magnetoencephalography study assessed implicit semantic access to grammatically constrained action and non-action verbs (n = 100 per category) while measuring spatiotemporally precise signals from the primary motor cortex (M1, a core region subserving bodily movements) and the anterior temporal lobe (ATL, a putative multimodal semantic hub). Convergent evidence from sensor- and source-level analyses revealed that increased modulations for action verbs occurred earlier in M1 (∼130–190 ms) than in specific ATL hubs (∼250–410 ms). Moreover, machine-learning decoding showed that trial-by-trial classification peaks emerged earlier in M1 (∼100–175 ms) than in the ATL (∼345–500 ms), with over 71% accuracy in both cases. Given their latencies, these results challenge the ‘symbolic view’ and its implication that sensorimotor mechanisms play only secondary roles in semantic processing. Instead, our findings support the ‘grounded view’, showing that early semantic effects are critically driven by embodied reactivations and that these cannot be reduced to post-comprehension epiphenomena, even when words are individually classified. In short, our study offers non-trivial insights to constrain fine-grained models of language and to understand how meaning unfolds in neural time.